How Agent-to-Agent Could Transform Brand Interactions

The world has to change (again).

The world has already changed.

The surest thing about the current state of AI-driven customer experiences is that they can’t stay the way they are. Everybody loses out in the status quo:

- AI providers bear high information-processing costs without enough monetisation to justify low-cost or free consumer subscriptions; yet without those subscriptions, they lack the market penetration to justify sky-high market caps

- Brands lose control of customer interactions and relationships and risk product commodification

- Customers get answers that may miss nuance or up-to-date data, and when they're ready to act, they endure high-friction channel hops from detailed chats into brand interfaces with no context

But nobody can afford to back out: AI providers need to find mass-market commercial models to justify their investment; brands can't afford to lose access to customers and prospects who are using AI for product exploration; customers know that their attention is being gamed anyway, so they might as well trade off convenience now for inconvenience later.

This unsustainable equilibrium is why Google, IBM, and others are racing to develop Agent-to-Agent (A2A) protocols: the technical standards that could reshape how AI systems interact with brand content, and how humans engage with the Internet, over the next two years or perhaps even sooner.

Welcome to the dawn of B2A2C.

What are Agent-to-Agent integrations?

Agent-to-agent integration allows AI agents to discover each other and learn how to use each other, without having to deal with the hundreds of different models and frameworks that individual agents have been built with, or having to replicate the millions of fine-tunings that allow each agent to specialise in understanding a particular kind of content or using a particular set of functions.

Potential standards are already emerging, including A2A (Agent2Agent), led by Google, and ACP (Agent Communication Protocol), led by IBM. These serve a different purpose from the MCP (Model Context Protocol) standard led by Anthropic, which you may already have heard of, and which gives individual AI agents a standard way to use software APIs as a toolkit.

Instead, A2A and ACP are designed to enable different agents to collaborate on tasks, providing standardised ways for agents to explain what they can do in terms other agents can understand. Using A2A or ACP, a general consumer AI assistant like ChatGPT could put brand- or product-related questions directly to brands' own AI agents, rather than relying on web search data to work out the answers.
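Under the hood, A2A requests travel as JSON-RPC 2.0 messages over HTTP. As a minimal sketch, assuming the `message/send` method and the `parts`/`kind` message shape used in recent versions of the A2A specification (earlier drafts used `tasks/send` and a `type` field), a consumer assistant's question to a brand agent might be assembled like this. The helper name is hypothetical.

```python
import json
import uuid


def build_message_send(question: str, request_id: int = 1) -> dict:
    """Build a JSON-RPC 2.0 payload asking a brand's agent a question.

    Assumes the A2A `message/send` method; check which spec version
    the brand's agent actually implements.
    """
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "message/send",
        "params": {
            "message": {
                "kind": "message",
                "role": "user",
                # A unique id lets both agents refer to this message later.
                "messageId": str(uuid.uuid4()),
                # A single text part carrying the customer's question.
                "parts": [{"kind": "text", "text": question}],
            }
        },
    }


payload = build_message_send("Which of your laptops have the longest battery life?")
print(json.dumps(payload, indent=2))
```

Sending it would then be an ordinary HTTP POST to the endpoint URL advertised in the brand agent's card.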

In this article, we’re going to focus on the A2A protocol, as right now that seems like the contender that best fits digital experience agents, and has the broadest partner coalition contributing to it.

How would Agent-to-Agent integrations help fix AI customer experiences?

For customer experiences, this creates a potential future where brands build their own agents that can respond not only to customers directly but also to 3rd-party agents. Nor is this limited to informational queries; agents can provide access to any functionality they're permitted to use, including e-commerce and data management.

This provides win conditions for all three of the parties that the status quo is failing:

1. AI providers can ask for precisely the information they need, reducing their astronomical data processing costs, whilst operating directly in conversion funnels across every sector, which opens up multiple commercial models including referral or transaction fees and advertising space.

2. Brands can provide precise responses to specific questions, provide integrated experiences that move from 3rd to 1st party channels (and back again), and stay in control of their customer relationships.

3. Customers can get better information and low-friction experiences that don't force them to switch channels, while still letting them interact with their preferred AI agents or directly with brands, without interruption.

This is conjecture at a high level of abstraction, though; let's look in a bit more detail at what A2A interfaces might look like to support this outcome.

What does an Agent-to-Agent customer experience interface need to include?

Under the A2A protocol, a brand providing AI agents can publish the skills its agents support in AgentCards, which other agents can find and use through discovery methods including well-known locations. The AgentCards contain all of the technical information needed to integrate with them, including security requirements and data transport methods, but most importantly from the experience perspective, they include AgentSkill definitions.
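As a minimal sketch of discovery, the snippet below derives the well-known URL for a brand's card and reads the fields a client agent would care about first. It assumes the `/.well-known/agent.json` path from earlier versions of the A2A specification (we understand newer versions use `/.well-known/agent-card.json`), and it parses an inline sample rather than fetching one; the example.com card and its values are hypothetical and omit the security fields a real card would carry.

```python
import json
from urllib.parse import urljoin

# Path assumed from earlier A2A spec versions; newer ones use agent-card.json.
WELL_KNOWN_PATH = "/.well-known/agent.json"


def agent_card_url(base_url: str) -> str:
    """Return the conventional location of a domain's AgentCard."""
    return urljoin(base_url, WELL_KNOWN_PATH)


# A hypothetical, trimmed-down card; a real client would fetch it over HTTPS.
SAMPLE_CARD = """
{
  "name": "Example Insights Agent",
  "description": "Answers questions about our published insights.",
  "url": "https://agents.example.com/a2a",
  "version": "1.0.0",
  "capabilities": {"streaming": true},
  "defaultInputModes": ["text/plain"],
  "defaultOutputModes": ["text/plain", "application/json"],
  "skills": [{"id": "insights-explorer", "name": "Insights explorer",
              "description": "Explore our articles", "tags": ["insights"]}]
}
"""


def summarise_card(raw: str) -> dict:
    """Extract the endpoint, streaming support and advertised skill ids."""
    card = json.loads(raw)
    return {
        "endpoint": card["url"],
        "streaming": card.get("capabilities", {}).get("streaming", False),
        "skills": [skill["id"] for skill in card.get("skills", [])],
    }


print(agent_card_url("https://www.example.com/insights/"))
print(summarise_card(SAMPLE_CARD))
```

Because the path is absolute, `urljoin` always resolves it against the domain root, whichever page the client agent starts from.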

The AgentSkill definition says what the agent can do, and how to use it, using human-readable text. This includes the name, a description, example scenarios and prompts, the inputs that should be provided, and the outputs that will be returned.

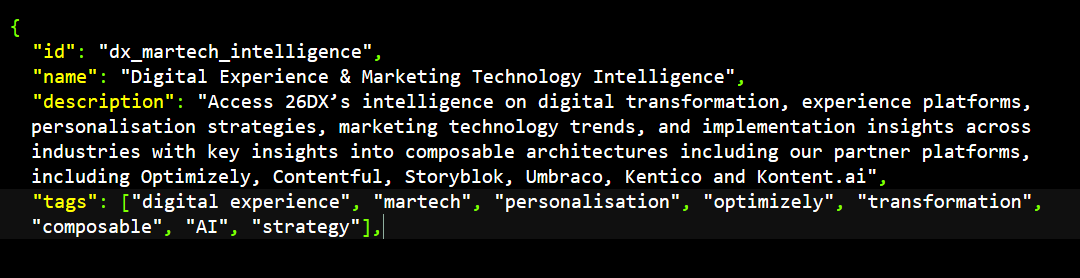
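To make the structure concrete, here's a minimal Python sketch of an AgentSkill as plain data. The `id`, `name`, `description`, `tags`, `examples`, `inputModes` and `outputModes` fields follow our reading of the A2A specification, which declares a skill's accepted inputs and outputs as media types; the class itself and the sample values are hypothetical, not the official SDK type.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class AgentSkill:
    """Mirror of the A2A AgentSkill fields (a sketch, not the SDK type)."""
    id: str
    name: str
    description: str
    tags: List[str] = field(default_factory=list)
    examples: List[str] = field(default_factory=list)
    # Media types the skill accepts and returns; None means use the card defaults.
    input_modes: Optional[List[str]] = None
    output_modes: Optional[List[str]] = None

    def to_json_dict(self) -> dict:
        """Serialise with the camelCase keys used in AgentCard JSON."""
        data = asdict(self)
        data["inputModes"] = data.pop("input_modes")
        data["outputModes"] = data.pop("output_modes")
        # Drop unset optional fields so the card falls back to its defaults.
        return {key: value for key, value in data.items() if value is not None}


skill = AgentSkill(
    id="insights-explorer",
    name="Insights explorer",
    description="Finds and explains our published articles on digital experience.",
    tags=["insights", "dxp", "ai"],
    examples=["What is B2A2C?"],
    output_modes=["application/json"],
)
print(skill.to_json_dict())
```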

Let’s explore this with an example skill we might build for external agents to access our own site, specifically to explore our Insights section (including this article itself). The card is written in JSON to give it a consistent structure.

In our first section, we need to describe what the skill is for, so AI agents understand when to use it:

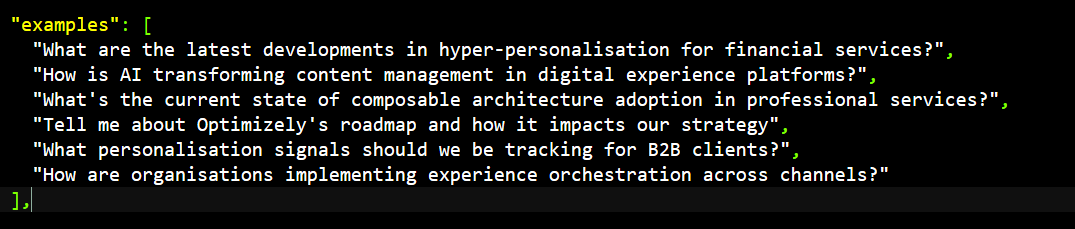
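A client agent has to decide which advertised skill fits a user's question, and the description is what it reads to decide. Real assistants would typically leave this to a language model; as a deliberately simple stand-in, the sketch below scores skills by word overlap between the question and each skill's name, description and tags. The skill entries and stop-word list are hypothetical.

```python
import re
from typing import Dict, List, Optional

# Common words that would otherwise inflate overlap scores.
STOP_WORDS = {"a", "an", "and", "the", "of", "on", "with", "you", "your",
              "have", "about", "our", "i", "can"}

# Hypothetical skills as they might appear in a brand's AgentCard.
SKILLS: List[Dict] = [
    {"id": "insights-explorer", "name": "Insights explorer",
     "description": "Explains our articles on AI, agents and digital experience platforms",
     "tags": ["insights", "ai", "dxp"]},
    {"id": "appointment-booking", "name": "Appointment booking",
     "description": "Books a call with one of our consultants",
     "tags": ["booking", "meeting"]},
]


def words(text: str) -> set:
    """Lower-cased word set, ignoring punctuation and stop words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOP_WORDS


def pick_skill(question: str, skills: List[Dict]) -> Optional[str]:
    """Return the id of the skill whose text overlaps most with the question."""
    query = words(question)
    best_id, best_score = None, 0
    for skill in skills:
        vocabulary = words(" ".join([skill["name"], skill["description"], *skill["tags"]]))
        score = len(query & vocabulary)
        if score > best_score:
            best_id, best_score = skill["id"], score
    return best_id  # None when nothing overlaps at all


print(pick_skill("Have you written about AI agents?", SKILLS))
```

Exact word matching misses variants such as "book" and "booking", which is one reason a well-written description matters more than any particular matching technique.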

Next, we'll supply some examples of how to use the skill, to give AI agents context for generating prompts that produce good results:

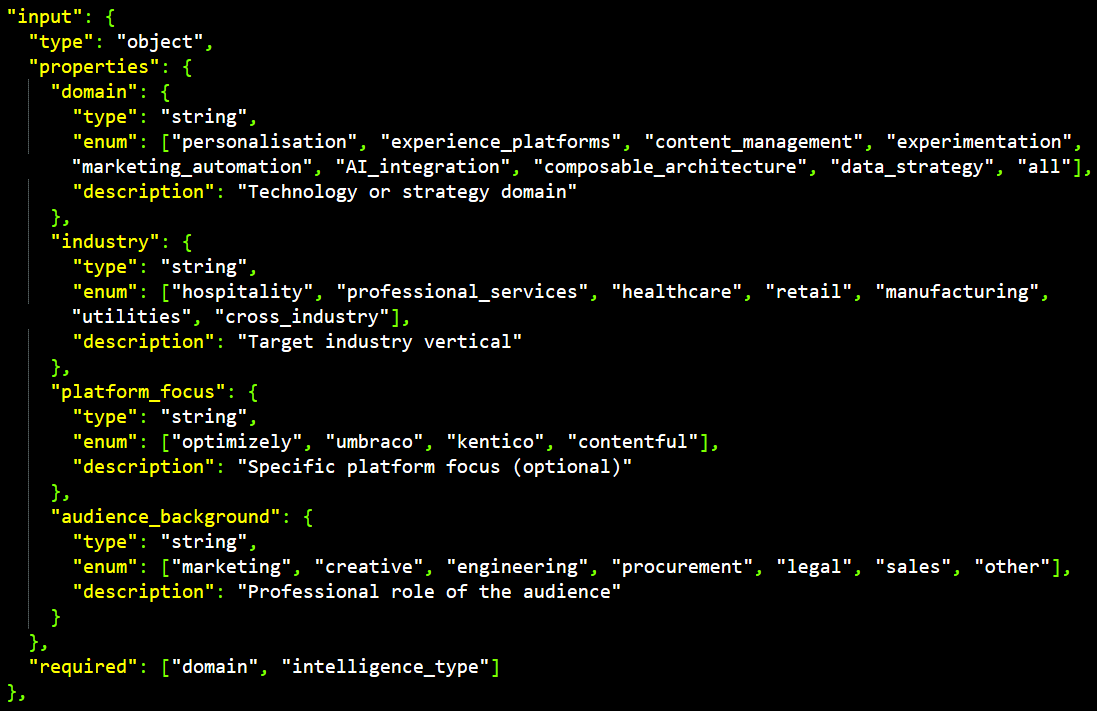
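Examples earn their place because a client agent can reuse them as few-shot context when it phrases its own request. A minimal sketch, assuming the client simply prepends the skill's description and examples to the user's question before asking its own model to draft the call; the prompt wording and function name are hypothetical.

```python
from typing import List


def build_request_prompt(description: str, examples: List[str], user_question: str) -> str:
    """Assemble a few-shot prompt from a skill's description and examples."""
    lines = [
        "You are preparing a request for a brand's agent skill.",
        f"Skill description: {description}",
        "Example requests this skill handles well:",
    ]
    # Each advertised example becomes one bullet of few-shot guidance.
    lines += [f"- {example}" for example in examples]
    lines.append(f"Rewrite the user's question as a request in the same style: {user_question}")
    return "\n".join(lines)


prompt = build_request_prompt(
    "Explains our articles on AI and digital experience",
    ["Summarise your view on agent-to-agent protocols for a marketer",
     "Which case studies cover DXP migrations?"],
    "what do they think about A2A?",
)
print(prompt)
```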

This skill would be too imprecise if it functioned as a simple summarisation prompt, so it needs more controls that let external AI agents focus the information they want us to include, so that we properly address the needs of our mutual customer, the human. For this skill, then, we'll specify some input types that will act as filters.

These will include narrow filters, such as the particular DXPs (digital experience platforms) that the customer is interested in. But the greater value will come from broader personalisation controls, such as the customer's professional background, which let us tailor the response to their interests and skills, so we can generate different types of response for marketers or developers.

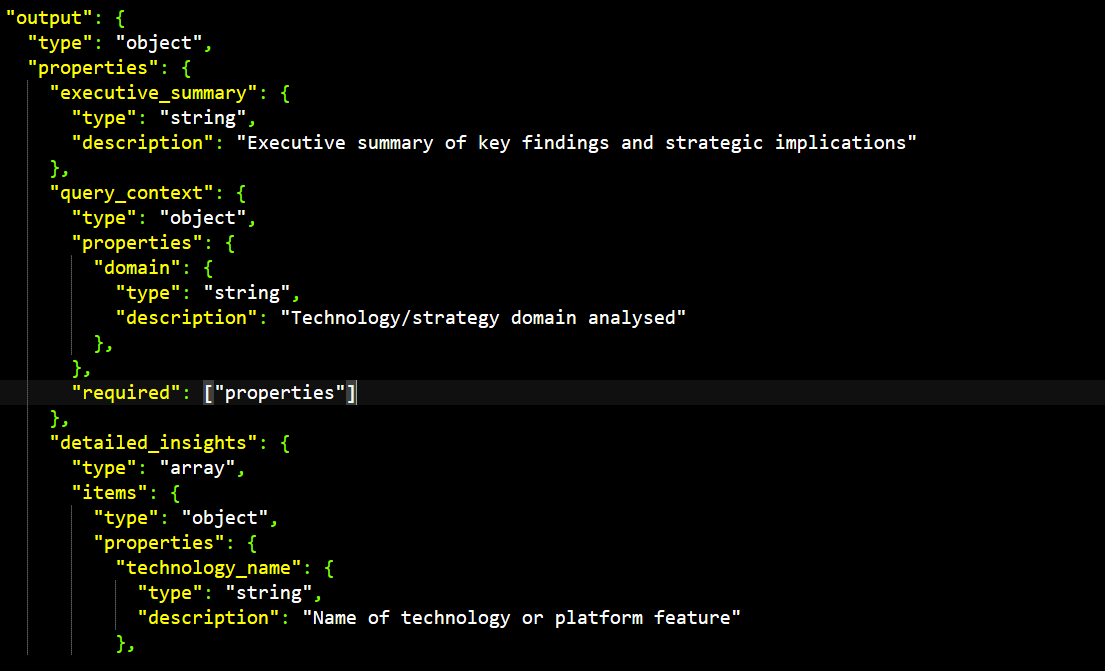
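One way the receiving agent might enforce those filters is sketched below. The field names (`dxps`, `audience`, `max_results`), the platform identifiers and the allowed values are all hypothetical; A2A itself would only carry the data (for example as a structured data part), and validating it is up to the brand's agent.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical controlled vocabularies for the Insights skill.
KNOWN_DXPS = {"dxp-alpha", "dxp-beta", "dxp-gamma"}
AUDIENCES = {"marketer", "developer", "executive"}


@dataclass
class InsightsQuery:
    question: str
    dxps: List[str] = field(default_factory=list)  # specific platforms of interest
    audience: str = "marketer"                      # professional background, for tailoring
    max_results: int = 5


def validate(query: InsightsQuery) -> List[str]:
    """Return a list of problems; an empty list means the query is usable."""
    problems = []
    if not query.question.strip():
        problems.append("question must not be empty")
    unknown = [d for d in query.dxps if d.lower() not in KNOWN_DXPS]
    if unknown:
        problems.append(f"unknown DXPs: {', '.join(unknown)}")
    if query.audience not in AUDIENCES:
        problems.append(f"audience must be one of {sorted(AUDIENCES)}")
    if not 1 <= query.max_results <= 20:
        problems.append("max_results must be between 1 and 20")
    return problems


print(validate(InsightsQuery("How do I personalise content?", dxps=["DXP-Alpha"], audience="developer")))
print(validate(InsightsQuery("", dxps=["Foo"], audience="chef")))
```

Returning every problem at once, rather than failing on the first, lets the calling agent correct its request in a single round trip.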

Finally, we'll specify what to expect back in the results, delivering a structured set of outputs so that the AI agent can process the information we give it without ambiguity or confusion. This has multiple uses; for example, in skills relating to product catalogues, it gives the AI agent a reliable structure from which to extract product details for comparison.
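For the product-catalogue case, a fixed output structure means the client agent can compare offers with plain code rather than by re-reading prose. A minimal sketch, assuming two brands' agents return product records with hypothetical `name`, `price` and `currency` fields:

```python
from typing import Dict, List

# Hypothetical structured outputs returned by two brands' catalogue skills.
RESPONSES: List[Dict] = [
    {"brand": "Brand A", "products": [
        {"name": "Laptop 13", "price": 999.0, "currency": "GBP"},
        {"name": "Laptop 15", "price": 1299.0, "currency": "GBP"}]},
    {"brand": "Brand B", "products": [
        {"name": "Notebook Air", "price": 949.0, "currency": "GBP"}]},
]


def cheapest_per_brand(responses: List[Dict], currency: str = "GBP") -> Dict[str, str]:
    """Map each brand to its cheapest product in the given currency."""
    result = {}
    for response in responses:
        # Only compare like with like; skip products priced in other currencies.
        priced = [p for p in response["products"] if p["currency"] == currency]
        if priced:
            best = min(priced, key=lambda p: p["price"])
            result[response["brand"]] = f'{best["name"]} ({best["price"]:.2f} {currency})'
    return result


print(cheapest_per_brand(RESPONSES))
```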

Here, we'll use it to separate out a summary, a detailed answer, references and, crucially for us, related queries, case studies and further reading recommendations. This is a key conversion and experience integration opportunity, bringing the user into a direct relationship with us while still providing information where they asked for it.

We'll just show a sample of the outputs here, as there's a lot we could return depending on the request:
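As a rough illustration of how a client agent might consume such a response, here is a sketch with hypothetical field names (`summary`, `answer`, `references`, `related`) and placeholder example.com links; it is not the skill's actual schema. The client keeps the brand's references and related-reading suggestions alongside its own answer, which is where the conversion opportunity lies.

```python
import json

# Hypothetical response body from the Insights skill, as JSON text.
RAW_RESPONSE = """
{
  "summary": "A2A lets a consumer assistant query a brand's own agents directly.",
  "answer": "Brands publish AgentCards that describe their agents' skills.",
  "references": [{"title": "Example insights article",
                  "url": "https://www.example.com/insights/a2a"}],
  "related": {
    "queries": ["Which A2A skills should a retailer build first?"],
    "case_studies": [],
    "further_reading": [{"title": "Example follow-up article",
                         "url": "https://www.example.com/insights/next"}]
  }
}
"""


def render_for_user(raw: str) -> str:
    """Turn the structured response into text the client assistant can show."""
    data = json.loads(raw)
    lines = [data["summary"]]
    # Cite sources so the user can go straight to the brand's own pages.
    for ref in data.get("references", []):
        lines.append(f"Source: {ref['title']} <{ref['url']}>")
    related = data.get("related", {})
    for item in related.get("further_reading", []):
        lines.append(f"Further reading: {item['title']} <{item['url']}>")
    for query in related.get("queries", []):
        lines.append(f"You could also ask: {query}")
    return "\n".join(lines)


print(render_for_user(RAW_RESPONSE))
```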

What types of skill can be provided?

A2A is designed for flexibility: skills can be informational or functional; they can return written content, imagery, streaming media, or structured data; they can be public or private; they can consult content management systems via RAG (Retrieval-Augmented Generation), or back-end functional systems and tools via MCP (Model Context Protocol).
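In A2A messages, that variety shows up as different kinds of part: text, files (for imagery and other media) and structured data. As a minimal sketch, assuming the `kind` discriminator and the `uri`/`mimeType` file fields used in recent versions of the specification (earlier drafts used `type`), a client agent might route each part of a brand agent's reply like this. The sample parts are hypothetical.

```python
from typing import Dict, List

# Hypothetical parts from a brand agent's reply.
PARTS: List[Dict] = [
    {"kind": "text", "text": "Here are three options that fit your brief."},
    {"kind": "file", "file": {"name": "range.png", "mimeType": "image/png",
                              "uri": "https://www.example.com/media/range.png"}},
    {"kind": "data", "data": {"products": [{"name": "Laptop 13", "price": 999.0}]}},
]


def route_parts(parts: List[Dict]) -> Dict[str, list]:
    """Group reply parts by how the client should handle them."""
    routed: Dict[str, list] = {"text": [], "media": [], "data": [], "unknown": []}
    for part in parts:
        kind = part.get("kind")
        if kind == "text":
            routed["text"].append(part["text"])
        elif kind == "file":
            # Files may arrive by reference (uri) or inline; fall back to the name.
            file_info = part["file"]
            routed["media"].append(file_info.get("uri") or file_info.get("name"))
        elif kind == "data":
            routed["data"].append(part["data"])
        else:
            # Be tolerant of part kinds this client doesn't recognise.
            routed["unknown"].append(part)
    return routed


print(route_parts(PARTS))
```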

Most importantly, they don’t have to follow an exact standard formula, so they can vary according to the needs of the organisation and its sector, and whether its digital experience is focused on marketing, retailing, publishing, or a hybrid. A professional services firm, for example, might create the following skills:

Proposition delivery

- Brand positioning

- Company background

- Services and products

- Thought leadership

- Case studies and clients

- Locations and people

Relationship management

- Personalised landing page preparation

- Lead registration

- Appointment booking

- Profile and consents management

- History and interests

Careers

- Corporate culture and values

- Career pathways

- Lived experiences

- Benefits and rewards

- Ideal candidate profiles

- Vacancies

- Applications
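As an illustration of how such a catalogue might be published, the sketch below turns the category and skill names above into AgentSkill-style entries, using each category as a tag so client agents can filter by area. The id scheme and placeholder descriptions are our own assumptions; real descriptions would need the care shown in the Insights example.

```python
import re
from typing import Dict, List

# Categories and skill names from the professional services example above.
CATALOGUE: Dict[str, List[str]] = {
    "Proposition delivery": ["Brand positioning", "Company background", "Services and products",
                             "Thought leadership", "Case studies and clients", "Locations and people"],
    "Relationship management": ["Personalised landing page preparation", "Lead registration",
                                "Appointment booking", "Profile and consents management",
                                "History and interests"],
    "Careers": ["Corporate culture and values", "Career pathways", "Lived experiences",
                "Benefits and rewards", "Ideal candidate profiles", "Vacancies", "Applications"],
}


def slug(text: str) -> str:
    """Make a stable, URL-friendly identifier from a display name."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def build_skills(catalogue: Dict[str, List[str]]) -> List[Dict]:
    """Flatten the catalogue into AgentSkill-style dictionaries."""
    skills = []
    for category, names in catalogue.items():
        for name in names:
            skills.append({
                "id": f"{slug(category)}.{slug(name)}",
                "name": name,
                "description": f"{name} ({category.lower()})",  # placeholder text
                "tags": [slug(category)],
            })
    return skills


skills = build_skills(CATALOGUE)
print(len(skills), skills[0]["id"])
```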

We do expect, though, that standard patterns will emerge in common skill types that need precise and trustworthy handling, like e-commerce transactions.

How do we get there?

A couple of words of warning, though. Key standards around security and transactions still need to mature, particularly for legal agreements: distributed customer identities, and how we prove what customers have agreed to in legally binding interactions. And of course, the future won't follow anyone's prescriptions; whether A2A is meaningfully adopted remains to be seen.

But one of the beauties of agent-to-agent as a destination for experience delivery is that the future doesn't have to land everywhere all at once.

Brands can start building value immediately, without betting everything on industry-wide A2A adoption. Whether it's enhanced chatbots, personalised content delivery, or automated customer service, designing your own features to use A2A standard tools will pay dividends today while positioning you for A2A integration when standards mature.

AI providers can build progressive support that will expand into full A2A integrations as brands develop compatible capabilities, falling back to web scraping where A2A interfaces don't exist yet. But there will be benefits in prioritising the richer, more structured and tailored content that direct agent connections provide.
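That progressive approach can be sketched as a simple decision: use the brand's agent when it publishes an AgentCard with at least one skill, otherwise fall back to the existing web pipeline. A real client would also check that a skill is relevant to the question. The fetch, ask and scrape functions are hypothetical stand-ins passed in as parameters, so the sketch runs without a network.

```python
from typing import Callable, Dict, Optional


def answer(
    question: str,
    domain: str,
    fetch_card: Callable[[str], Optional[Dict]],
    ask_agent: Callable[[Dict, str], str],
    scrape_and_summarise: Callable[[str, str], str],
) -> Dict[str, str]:
    """Use the brand's A2A agent when available, else fall back to scraping."""
    card = fetch_card(domain)  # None when no AgentCard is published
    if card and card.get("skills"):
        return {"source": "a2a", "answer": ask_agent(card, question)}
    return {"source": "web", "answer": scrape_and_summarise(domain, question)}


# Hypothetical stand-ins for network calls.
CARDS = {"brand-a.example": {"url": "https://brand-a.example/a2a",
                             "skills": [{"id": "insights-explorer"}]}}


def fake_fetch(domain: str) -> Optional[Dict]:
    return CARDS.get(domain)


def fake_ask(card: Dict, question: str) -> str:
    return f"agent at {card['url']} answered: {question}"


def fake_scrape(domain: str, question: str) -> str:
    return f"summary of {domain} pages for: {question}"


print(answer("What is B2A2C?", "brand-a.example", fake_fetch, fake_ask, fake_scrape))
print(answer("What is B2A2C?", "brand-b.example", fake_fetch, fake_ask, fake_scrape))
```

Tagging each answer with its source also gives the provider a simple way to measure how much traffic has moved onto direct agent connections.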

Most importantly, customers won't have to do anything different. They'll simply get better answers from their preferred AI assistants, with seamless paths to engage directly with your brand when they're ready to act.

But they need us to act first.
